Log Manager v10

You can use the PEM Log Manager to simplify server log configuration for Postgres instances. With Log Manager, you can modify all of your server log parameters including:

- Where log files are written

- How often log files are written

- The type of information written to log files

- The format of log file entries

- Log rotation properties

Before using Log Manager to define logging properties for a server, you must specify the name of the associated Advanced Server or PostgreSQL database server in the Service ID field on the Advanced tab of the New Server Registration or Properties dialog box. The server is available for configuration on the Server Selection dialog box only if you specify the name of the service in the Service ID field.

For example, suppose you're setting logging preferences for an Advanced Server 9.4 instance that resides on a Linux host. Set the Service ID field on the Advanced tab of the Properties dialog box for the monitored server to ppas-9.4.

Note

- Log Manager depends on Settings and Server Log Configuration probes to populate all the fields in the wizard. Therefore, ensure that those probes for selected servers are enabled. In addition, set the execution frequency for those probes to a minimum to ensure that Log Manager reflects the latest log configurations.

- Rerun Log Manager if you make any manual changes related to logging in the configuration files, such as

postgresql.conf. PEM doesn't reflect those changes automatically.

To configure logging for a Postgres instance, you must register the server as a PEM-managed server, and the registration information must include the name of a service script.

To open Log Manager, in the PEM client, select Management > Log Manager. The wizard opens and welcome message appears. Select Next.

The Server Selection dialog box displays a list of the server connections monitored by PEM. Select the check boxes next to the names of servers to which you want the Log Manager wizard to apply the specified configuration. Log Manager is disabled for any server displaying a red exclamation mark to the left of its name in the Server selection tree. A server might not be enabled for several reasons:

Log Manager can configure only a server that specifies a service ID on the Advanced tab of the Properties dialog box. To provide a service ID:

- In the tree, right-click the server name and select Disconnect Server from the context menu.

- If prompted, provide a password.

- From the context menu for the server, select Properties.

- On the Advanced tab, enter the name of the service in the Service ID field.

- Select Save.

If the PEM agent bound to the server doesn't have enough privileges to restart the server, the server is disabled.

If the PEM agent bound to the server is an older version than the associated PEM server, the server is disabled.

Select Next.

Use the Log configuration dialog box to specify how often to import log files to PEM and to specify log rotation details.

Options in the Import Logs box specify how often to import the log files to PEM:

- Use the switch next to Import logs to PEM to specify whether to import the log files to PEM and to display them on the Server Log Analysis dashboard.

- Use the Import Frequency list to specify how often to import log files to PEM.

Use the Log rotation configuration box to specify the maximum length (lifespan or size) of a log file:

- Use the Rotation Size field to specify the maximum size of a log file in megabytes. The default value is 10 MB. Set to 0 if you don't want to place a limit on the maximum size of a log file.

- Use the Rotation Time field to specify the number of whole days to store in each log file. The default value is 1 day.

Use the Truncation on Rotation switch to specify server behavior for time-based log file rotation:

- Select On to overwrite any existing log file that has the same name as a new file.

- Select Off to append any new log file entries to an existing log file with the same name as a new log file. This is the default behavior.

Select Next.

Use the Where to Log dialog box to specify where to write log files:

Select an option from the Log Destination box to specify a destination for the server log output:

- Set the stderr switch to Yes to write log files to stderr.

- Set the csvlog switch to Yes to write log files in a comma-separated value format. This option is enabled and not editable if you select Import logs to PEM on the Schedule dialog box. If you aren't importing server log files to PEM, you can edit this option.

- Set the syslog switch to Yes to write log files to the system log files.

- On Windows, set the eventlog switch to Yes to write log files to the event log.

Use the Log collection box to specify your collection preferences:

- Set the Log Collector switch to Enable to redirect captured log messages (directed to stderr) into log files.

- Set the Log Silent Mode switch to Enable to run the server silently in the background, disassociated from the controlling terminal.

Use the Log Directory box to specify log file location preferences:

- Set the Change log directory for selected servers? switch to Yes to maintain each set of log files in a separate directory.

- Use the Directory name field to specify the directory to which to write log files. The directory resides beneath the

pg_logdirectory under the installation directory of the monitored server.

Use the Log File Name field to specify a format for the log file name. If set to Default, the format is

enterprisedb-%Y-%m-%d_%H%M%S, where:enterprisedbis the file name prefixYis the year that the log was storedmis the month that the log was storedis the day that the log was storedHis the hour that the log was storedMis the minute that the log was storeSis the second that the log was stored

When logging to syslog is enabled:

- Use the Syslog Facility list to specify the syslog facility to use.

- Use the Syslog Ident field to specify the program name that identifies EDB Postgres Advanced Server entries in system logs.

Select Next.

Use the When to Log dialog box to specify the events that initiate a log file entry.

The severity levels in order of severity, from most severe to least severe, are:

- panic — Errors that cause all database sessions to abort.

- fatal — Errors that cause a session to abort.

- log — Information messages of interest to administrators.

- error — Errors that cause a command to abort.

- warning — Error conditions in which a command completes but might not perform as expected.

- notice — Items of interest to users. This is the default.

- info — Information implicitly requested by the user.

- debug5 through debug1 — Detailed debugging information useful to developers.

Use the Client min messages list to specify the lowest severity level of message sent to the client application.

Use the Log min messages list to specify the lowest severity level to write to the server log.

By default, when an error message is written to the server log, the text of the SQL statement that initiated the log entry isn't included. Use the Log min error statement list to specify a severity level that triggers SQL statement logging. If a message is of the specified severity or higher, the SQL statement that produced the message is written to the server log.

Use the Log min duration statement list to specify a statement duration, in milliseconds. Any statements that exceed the specified number of milliseconds are written to the server log. A value of -1 disables all duration-based logging. A value of 0 logs all statements and their duration.

Use the Log temp files field to specify a file size, in kilobytes. When a temporary file reaches the specified size, it's logged. Specify a value of -1 (the default) to disable this functionality.

Use the Log autoVacuum min duration field to specify a time length, in milliseconds. If auto-vacuuming exceeds the length of time specified, the activity is logged. Specify a value of -1 (the default) to disable this functionality.

Select Next.

Use the What to Log dialog box to specify log entry options that are useful for debugging and auditing.

Use the switches in the Debug options box to include information in the log files related to query execution that might be of interest to a developer:

- Set the Parse tree switch to Yes to include the parse tree in the log file.

- Set the Rewriter output switch to Yes to include query rewriter output in the log file.

- Set the Execution plan switch to Yes to include the execution plan for each executed query in the log file.

When the Indent Debug Options Output in Log switch is set to Yes, the server indents each line that contains a parse tree entry, a query rewriter entry, or query execution plan entry. While indentation makes the resulting log file more readable, it results in a longer log file.

Use the switches in the General Options box to include auditing information in the log file:

- Set the Checkpoints switch to Yes to include checkpoints and restartpoints in the server log.

- Set the Connections switch to Yes to include each attempted connection to the server as well as successfully authenticated connections in the server log.

- Set the Disconnections switch to Yes to include a server log entry for each terminated session that provides the session information and session duration.

- Set the Duration switch to Yes to include the amount of time required to execute each logged statement in the server log.

- Set the Hostname switch to Yes to include both the IP address and host name in each server log entry. By default, only the IP address is logged. This might cause a performance penalty.

- Set the Lock Waits switch to Yes to write a log entry for any session that waits longer than the time specified in the

deadlock_timeoutparameter to acquire a lock. This is useful when trying to determine if lock waits are the cause of poor performance.

Use the Error verbosity list to specify the detail written to each entry in the server log:

- Select default to include the error message, DETAIL, HINT, QUERY, and CONTEXT in each server log entry.

- Select terse to log only the error message.

- Select verbose to include the error message, the DETAIL, HINT, QUERY, and CONTEXT error information, SQLSTATE error code and source code file name, the function name, and the line number that generated the error.

Use the Prefix string field to specify a printf-style string that is written at the beginning of each log file entry. For information about the options supported, see the log_line_prefix documentation in the Postgres core documentation.

Use the Statements list to specify the SQL statements to include in the server log:

- Specify none (the default) to disable logging of SQL statements.

- Specify ddl to instruct to log ddl (data definition language) statements, such as CREATE, ALTER, and DROP.

- Specify mod to log all ddl statements as well as all dml (data modification language) statements, such as INSERT, UPDATE, DELETE, TRUNCATE, and COPY FROM.

- Specify all to log all SQL statements.

Select Next.

Use the Schedule Logging Changes dialog box to specify when logging applies configuration changes:

- Set the Configure logging now switch to Yes to enable your configuration preferences. The server restarts when you complete the Log Manager wizard.

- Set Configure logging now to No to use the Schedule it for some other time calendar selector to specify a convenient time to apply logging configuration preferences and for the server to restart.

When you apply the configuration changes specified by the Log Manager wizard, the server restarts, temporarily interrupting use of the database server for users.

Select Finish to exit the wizard. Either restart the server or schedule the server restart for the time specified on the scheduling dialog box.

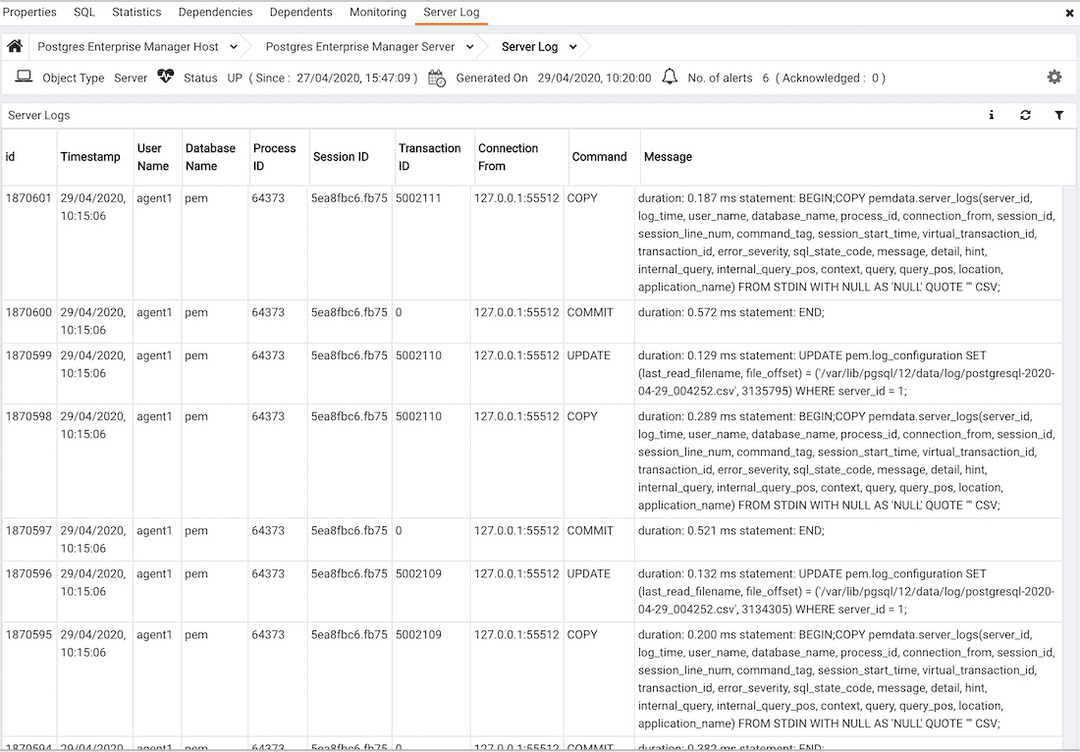

Reviewing the Server Log Analysis dashboard

After invoking the Log Manager wizard and importing your log files to PEM, you can use the Server Log Analysis dashboard to review the log files for a selected server. To open the Server Log Analysis dashboard, right-click the name of a monitored server in the PEM client tree and select Dashboards > Server Log Analysis.

The header information on the Server Log Analysis dashboard displays the date and time that the server was started, the date and time that the page was last updated, and the current number of triggered alerts.

Entries in the Server Log table appear in chronological order, with the most recent log entries first. Use the scroll bars to navigate through the log entries or to view columns that are off of the display.

Headings at the top of the server log table identify the information stored in each column. Hover over a column heading to view a tooltip that contains a description of the content of each column.

You can use filtering to limit the number of server log records that are displayed. Select Show Filters to open the filters panel and define a filter. Use the filter definition box to describe the selection criteria for selecting a subset of a report to display:

- Use the From field to specify a starting date for the displayed server log.

- Use the To field to specify an ending date for the displayed server log.

- Enter a role name in the Username field to display transactions performed only by that user.

- Enter a database name in the Database field to limit the displayed records to transactions that were performed against the specified database.

- Use the Command Type field to specify selection criteria for the commands that appear in the filtered report.

After you specify the criteria for filtering the server logs, select Filter to display the filtered server log in the Server Log table.

- On this page

- Reviewing the Server Log Analysis dashboard